Moral Machine: The AI Dilemma of Life and Death Choices

The Moral Machine is a thought-provoking experiment that challenges our ethical frameworks in the face of rapidly evolving technology. In scenarios where a self-driving car’s brakes fail, users face impossible choices: Should the car swerve to avoid a group of pedestrians, potentially killing its passengers? Or should it stay its course, sacrificing the pedestrians to save the passengers?

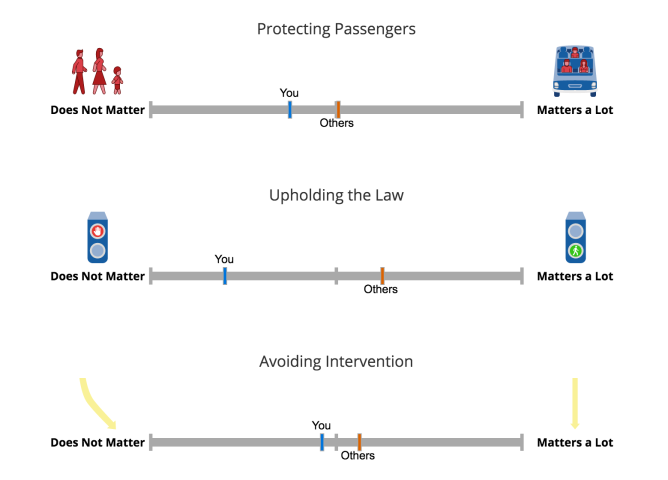

This game forces us to confront the ethical dilemmas that arise when machines are tasked with making life-or-death decisions. The traditional Trolley Problem, a thought experiment in ethics, is at the heart of the Moral Machine. It explores the moral implications of sacrificing a few to save many. But with self-driving cars, this problem becomes more complex. Who is ultimately responsible for the decisions made by the AI? The passengers who knowingly entered the vehicle, the company that created the AI, or the AI itself?

The scenarios in the Moral Machine extend beyond the traditional Trolley Problem, introducing nuanced factors such as age, gender, and even the presence of animals. These varied scenarios demonstrate the complexities of ethical decision-making, particularly in situations where human life is at stake.

The Moral Machine’s impact extends beyond the realm of thought experiments. As self-driving cars become more prevalent, the questions raised by this game will have tangible real-world consequences. Engineers are currently developing AI systems capable of making critical decisions in autonomous vehicles. The ethical frameworks that inform these decisions will be crucial in determining the future of self-driving cars and their integration into our society.